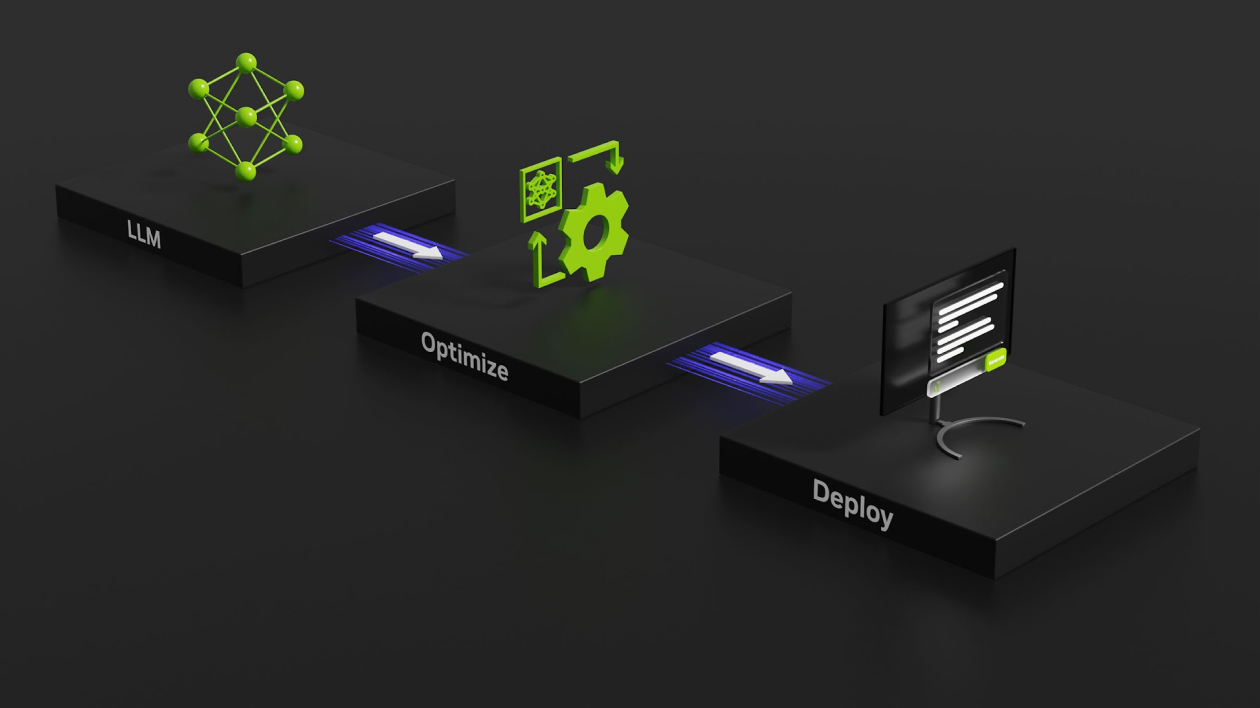

Over the past few years, Generative AI models have popped up everywhere: from producing realistic answers to complex questions to generating images and music that impress art critics around the globe. However, some users and businesses still cannot use Generative AI because of limited resources, the high cost of compute, or costs that simply outweigh their business goals. In this project, we enable them to quantize a wide range of AI models into different sizes and build an optimized inference engine using TensorRT-LLM. You will see how to quantize a model into one of the quantization formats (`qformat`), such as `fp8`, `int8_sq`, `int4_awq` and many more.

From there, you will see how to build an inference engine using NVIDIA TensorRT-LLM. If you are interested in a more detailed explanation of building optimized inference engines, take a look at the official TensorRT-LLM documentation. After that, you might want to get your hands on your favourite AI model and quantize it to integrate into your own applications. Which model will it be? Llama? Nemotron? Let's get started!
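To make "different sizes" concrete, the sketch below estimates how much storage a model's weights need at a few precisions. It is back-of-the-envelope arithmetic, not output from TensorRT-LLM: `estimate_weight_gib` and the bits-per-weight table are hypothetical, and real quantized checkpoints are somewhat larger because they also store scales and may keep some layers (such as embeddings) in higher precision.

```python
# Rough weight-storage estimate for a model at different precisions.
# Assumption: nominal bits per weight only; real checkpoints add overhead
# (per-group scales, layers left unquantized).
BITS_PER_WEIGHT = {
    "fp16": 16,      # unquantized half-precision baseline
    "fp8": 8,
    "int4_awq": 4,
}


def estimate_weight_gib(num_params: int, qformat: str) -> float:
    """Approximate weight storage in GiB for `num_params` parameters."""
    bits = BITS_PER_WEIGHT[qformat]
    return num_params * bits / 8 / 1024**3


if __name__ == "__main__":
    # Hypothetical 7B-parameter model.
    for fmt in BITS_PER_WEIGHT:
        print(f"{fmt:>9}: {estimate_weight_gib(7_000_000_000, fmt):.2f} GiB")
```

For a 7B-parameter model this gives roughly 13.04 GiB at fp16, 6.52 GiB at fp8 and 3.26 GiB at 4 bits, which is the kind of reduction that lets a model fit on a smaller GPU.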

- Operating System: Ubuntu 22.04

- CPU: No specific requirement; tested with an Intel Core i7 (7th Gen) CPU @ 2.30 GHz

- GPU requirements: Any NVIDIA training GPU, tested with 1x NVIDIA GTX-16GB

- NVIDIA driver requirements: Latest driver version (with CUDA 12.2)

- Storage requirements: 40GB
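Before cloning, you may want to confirm that the target drive actually has the 40 GB of free space listed above. This is a minimal standard-library sketch, assuming "GB" means decimal gigabytes (10^9 bytes) and that the models will live on the same filesystem as the path you check; `has_enough_disk` is a hypothetical helper, not part of the project.

```python
import shutil

REQUIRED_GB = 40  # storage requirement from the list above


def has_enough_disk(path: str = ".", required_gb: float = REQUIRED_GB) -> bool:
    """Return True if the filesystem containing `path` has at least `required_gb` free.

    Assumption: "GB" means decimal gigabytes (10**9 bytes).
    """
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 10**9


if __name__ == "__main__":
    free_gb = shutil.disk_usage(".").free / 10**9
    status = "OK" if has_enough_disk(".") else "not enough"
    print(f"{free_gb:.1f} GB free: {status}")
```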

This section demonstrates how to use this project to run NVIDIA NIM Factory via NVIDIA AI Workbench.

- Hugging Face account: a username and access token to download models (some models require access permission).

- Enough disk space to store the downloaded models.
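Downloading a file from a Hugging Face repository is an HTTPS request to the Hub, with the access token sent as a bearer token when the repository is gated (as Llama is). The sketch below only builds such a request with the standard library and does not send it; reading the token from an `HF_TOKEN` environment variable is an assumption made for illustration (the application itself asks for credentials in its UI), and `hf_file_request` is a hypothetical helper.

```python
import os
import urllib.request
from typing import Optional


def hf_file_request(repo_id: str, filename: str, revision: str = "main",
                    token: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a request for one file in a Hugging Face model repo.

    Public repos such as "gpt2" need no token; gated repos need a token from
    an account that has been granted access.
    """
    url = f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"
    headers = {}
    # Assumption: fall back to a token stored in the HF_TOKEN environment variable.
    token = token or os.environ.get("HF_TOKEN")
    if token:
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(url, headers=headers)


if __name__ == "__main__":
    req = hf_file_request("gpt2", "config.json")
    print(req.full_url)
```

Passing the request to `urllib.request.urlopen` would fetch the file; for a gated repository without a valid token, the Hub answers with an HTTP error instead.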

Install and configure AI Workbench locally, then open AI Workbench and select a location of your choice.


Fork this repo into your own GitHub account.


Inside AI Workbench:

- Click Clone Project and enter the repo URL of your newly-forked repo.

- AI Workbench will automatically clone the repo and build out the project environment, which can take several minutes to complete.

- Upon Build Complete, select Open Backend-app at the top right of the AI Workbench window, then select Open Frontend-app to interact with the application in your browser. Alternatively, go to the Environment section of Workbench and start 1) `backend-app`, then 2) `frontend-app`.


In the Frontend-app:

Choose your desired model family, such as Llama, Nemotron or GPT. (Note: follow the order of the tabs.) In our case, we chose the "GPT" model family, after which we are offered the available model versions listed in the "Support Matrix" of the TensorRT-LLM documentation.

Hugging Face credentials are optional as long as the model repository does not require special access permission, as Llama does, so make sure you have obtained permission if you want to select the Llama model family. Then click the Prepare Environment button at the top center. If everything installs successfully, the TensorRT-LLM label on the right side turns green; otherwise, it turns red.

Click the TensorRT-LLM tab next to Environment to proceed to quantization.

Choose one of the quantization formats offered by TensorRT-LLM; in our case, we chose the `int4_awq` format. Default values and a description are given for each parameter (a wider range of parameters will be offered in the future). Then click the Start Quantization button and watch the Quantization Window to follow the progress of the operation. If quantization succeeds, a new model directory such as "quant_gpt2_int4_awq" appears in the "models" folder, and the end of the window shows output like this:

```text
Inserted 147 quantizers
Caching activation statistics for awq_lite...
Searching awq_lite parameters...
Padding vocab_embedding and lm_head for AWQ weights export
current rank: 0, tp rank: 0, pp rank: 0
```
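Formats like `int4_awq` store each weight as a 4-bit integer plus one floating-point scale per group of weights. AWQ (activation-aware weight quantization) additionally rescales weight channels based on activation statistics, which is what the "Caching activation statistics" line refers to. The sketch below deliberately shows only the plain symmetric round-to-nearest group quantization that such formats build on, not AWQ itself and not TensorRT-LLM's implementation; the function names are hypothetical.

```python
from typing import List, Tuple

INT4_MIN, INT4_MAX = -8, 7  # signed 4-bit integer range


def quantize_int4_groups(weights: List[float], group_size: int) -> Tuple[List[int], List[float]]:
    """Round-to-nearest symmetric int4 quantization with one scale per group."""
    q: List[int] = []
    scales: List[float] = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        # Map the largest magnitude in the group to 7; an all-zero group gets scale 1.0.
        scale = max_abs / INT4_MAX if max_abs > 0 else 1.0
        scales.append(scale)
        q.extend(min(INT4_MAX, max(INT4_MIN, round(w / scale))) for w in group)
    return q, scales


def dequantize_int4_groups(q: List[int], scales: List[float], group_size: int) -> List[float]:
    """Recover approximate weights: each integer times the scale of its group."""
    return [v * scales[i // group_size] for i, v in enumerate(q)]


if __name__ == "__main__":
    w = [0.7, -0.35, 0.1, 0.0, 1.4, -1.4, 0.2, 0.7]
    q, s = quantize_int4_groups(w, group_size=4)
    print(q, s)
    print(dequantize_int4_groups(q, s, group_size=4))
```

Each reconstructed weight is off by at most half its group's scale. With a group size of 128 and a 16-bit scale per group, the scales add 16 / 128 = 0.125 bits per weight on top of the 4 bits, so a 4-bit checkpoint is slightly larger than a quarter of its fp16 size.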

Next, click the Build Engine tab to start building the inference engine.

Note: the max input length parameter must not exceed the value of `max_position_embeddings` in the model's `config.json` file; otherwise, you will get an error. Then click Start Engine Building to start the process, and watch the Build Window closely for any errors during the build. If the engine builds successfully, you will see output like this at the end:

```text
[03/12/2024-10:21:08] [TRT] [I] Engine generation completed in 35.9738 seconds.
[03/12/2024-10:21:08] [TRT] [I] [MemUsageStats] Peak memory usage of TRT CPU/GPU memory allocators: CPU 212 MiB, GPU 775 MiB
[03/12/2024-10:21:08] [TRT] [I] [MemUsageChange] TensorRT-managed allocation in building engine: CPU +0, GPU +775, now: CPU 0, GPU 775 (MiB)
[03/12/2024-10:21:09] [TRT] [I] [MemUsageStats] Peak memory usage during Engine building and serialization: CPU: 6600 MiB
[03/12/2024-10:21:09] [TRT-LLM] [I] Total time of building Unnamed Network 0: 00:00:36
[03/12/2024-10:21:09] [TRT-LLM] [I] Serializing engine to trtllm_quant_gpt2_int4_awq/trtllm-engine/trrank0.engine...
[03/12/2024-10:21:11] [TRT-LLM] [I] Engine serialized. Total time: 00:00:02
[03/12/2024-10:21:11] [TRT-LLM] [I] Total time of building all engines: 00:00:41
```
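The note about max input length can be checked before starting a build. This is a minimal sketch, assuming the Hugging Face `config.json` sits inside the downloaded model directory. GPT-2 checkpoints store the same limit as `n_positions` (1024 for `gpt2`) rather than `max_position_embeddings`, so the hypothetical helpers below accept either key.

```python
import json
from pathlib import Path


def load_config(model_dir: str) -> dict:
    """Read the Hugging Face config.json from a downloaded model directory."""
    return json.loads((Path(model_dir) / "config.json").read_text(encoding="utf-8"))


def max_positions(config: dict) -> int:
    """Return the model's maximum sequence length from its config."""
    # Llama-style configs use max_position_embeddings; GPT-2 uses n_positions.
    for key in ("max_position_embeddings", "n_positions"):
        if key in config:
            return int(config[key])
    raise KeyError("config has neither max_position_embeddings nor n_positions")


def check_max_input_len(config: dict, max_input_len: int) -> None:
    """Raise ValueError if the requested max input length exceeds the model limit."""
    limit = max_positions(config)
    if max_input_len > limit:
        raise ValueError(f"max_input_len={max_input_len} exceeds the model limit of {limit}")


if __name__ == "__main__":
    # Inline stand-in for GPT-2's config.json.
    check_max_input_len({"n_positions": 1024}, max_input_len=1024)
    print("max input length OK")
```

Against a real download you would call `check_max_input_len(load_config("models/gpt2"), 1024)`, where `models/gpt2` is an assumed location for the base model.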

After the model has been downloaded, quantized and built into an inference engine, the path of each model is saved in the `model_paths.json` file, located in the `code/nim-factory-ui/backend` directory of the project. In our case, the file should look like this:

```json
{
  "base_models": {
    "gpt2": "/models/gpt2"
  },
  "quant_models": {
    "quant_gpt2_int4_awq": "/models/quant_gpt2_int4_awq"
  },
  "trtllm_engines": {
    "trtllm_quant_gpt2_int4_awq": "/models/trtllm_quant_gpt2_int4_awq"
  }
}
```

This file can be used to keep track of all models when the application is deployed to remote servers.
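Because `model_paths.json` is plain JSON, tracking models on a remote server can start with a few lines of Python. The sketch below loads the file and reports registered entries whose directory does not exist. The helper names are hypothetical, and the default path assumes the script runs from the project root.

```python
import json
from pathlib import Path
from typing import Dict, List, Tuple

ModelPaths = Dict[str, Dict[str, str]]


def load_model_paths(path: str = "code/nim-factory-ui/backend/model_paths.json") -> ModelPaths:
    """Load the model registry written by the backend."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def missing_paths(model_paths: ModelPaths) -> List[Tuple[str, str, str]]:
    """Return (category, name, path) for every registered directory that does not exist."""
    missing = []
    for category, entries in model_paths.items():
        for name, model_dir in entries.items():
            if not Path(model_dir).is_dir():
                missing.append((category, name, model_dir))
    return missing


if __name__ == "__main__":
    # Same structure as the example file; on a real deployment use load_model_paths().
    example = {
        "base_models": {"gpt2": "/models/gpt2"},
        "quant_models": {"quant_gpt2_int4_awq": "/models/quant_gpt2_int4_awq"},
        "trtllm_engines": {"trtllm_quant_gpt2_int4_awq": "/models/trtllm_quant_gpt2_int4_awq"},
    }
    for category, name, path in missing_paths(example):
        print(f"missing {category}/{name}: {path}")
```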

Finally, check your "models" folder for an inference engine directory whose name starts with "trtllm". If it exists, you can run the following command manually to interact with the model:

```shell
python3 run.py --engine_dir models/trtllm_quant_gpt2_int4_awq
```
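The check-and-run step can be scripted if you have several engines. This sketch lists the "trtllm"-prefixed directories in the models folder and builds the same `run.py` command for each one. It assumes `run.py` is in the current working directory, passes only the `--engine_dir` flag used in the manual command, and uses hypothetical helper names.

```python
import shlex
from pathlib import Path
from typing import List


def find_engine_dirs(models_dir: str = "models") -> List[Path]:
    """Return sub-directories of `models_dir` whose names start with 'trtllm'."""
    root = Path(models_dir)
    if not root.is_dir():
        return []
    return sorted(p for p in root.iterdir() if p.is_dir() and p.name.startswith("trtllm"))


def run_command(engine_dir: Path) -> List[str]:
    """Build the manual run.py command for one engine (run.py location is assumed)."""
    return ["python3", "run.py", "--engine_dir", str(engine_dir)]


if __name__ == "__main__":
    for engine in find_engine_dirs():
        print(shlex.join(run_command(engine)))
```

To launch an engine instead of printing the command, pass the list to `subprocess.run(run_command(engine), check=True)`; building an argument list rather than a shell string avoids quoting problems with unusual directory names.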

The Run Engine tab, which will let users chat with their inference engine directly in the application, is currently under active development.

Link: video link

Check out my achievement and project submission to the hackathon: hackathon_submission_link

This project demonstrates the first steps toward building your own "NIMs". Much remains to be done to improve the application, such as advanced error handling and support for more TensorRT-LLM features. We are actively developing the Run Engine tab so that users can interact with the built engine, although we are currently short on hardware resources. The application serves as a good stepping stone for anyone who wants to discover the power of the TensorRT libraries and take advantage of them.
