-

Notifications

You must be signed in to change notification settings - Fork 91

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Implement CO2Brine model on GPU #1325

Conversation

| /// Kernel wrapper for brine viscosity updates | ||

| PVTProps::BrineViscosity::KernelWrapper const m_brineViscosityWrapper; | ||

|

|

||

| /// Kernel wrapper for CO2 viscosity updates | ||

| PVTProps::FenghourCO2Viscosity::KernelWrapper const m_co2ViscosityWrapper; | ||

|

|

||

| /// Kernel wrapper for brine density updates | ||

| PVTProps::BrineCO2Density::KernelWrapper const m_brineDensityWrapper; | ||

|

|

||

| /// Kernel wrapper for CO2 density updates | ||

| PVTProps::SpanWagnerCO2Density::KernelWrapper const m_co2DensityWrapper; | ||

|

|

||

| /// Kernel wrapper for phase fraction and phase component fraction updates | ||

| PVTProps::CO2Solubility::KernelWrapper const m_co2SolubilityWrapper; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hello @klevzoff,

On Lassen, I struggled with the data members in the update class of the new CO2-brine model (currently called NewMultiPhaseMultiComponentFluid):

- In the current version of this PR, the data members are the wrappers of the PVTFunctions, such as, for instance,

PVTProps::BrineViscosity::KernelWrapper const m_brineViscosityWrapper;. But, when I try to compile on Lassen, I get a compilation error saying:

Error: Formal parameter space overflowed

located where we launch the fluid update (unfortunately I forgot to save the exact error message, I do this in another branch where I remove the non-GPU callable fluid models from the CMakeLists.txt...). I found a thread online saying that this error might be due to the way I pass the data to the GPU, so I tried

something else (see point 2 below).

- If I modify the update class to store views on the wrappers of the PVTFunctions:

/// Kernel wrapper for brine viscosity updates

arrayView1d< PVTProps::BrineViscosity::KernelWrapper const > const m_brineViscosityWrapper;

/// Kernel wrapper for CO2 viscosity updates

arrayView1d< PVTProps::FenghourCO2Viscosity::KernelWrapper const > const m_co2ViscosityWrapper;

/// Kernel wrapper for brine density updates

arrayView1d< PVTProps::BrineCO2Density::KernelWrapper const > const m_brineDensityWrapper;

/// Kernel wrapper for CO2 density updates

arrayView1d< PVTProps::SpanWagnerCO2Density::KernelWrapper const > const m_co2DensityWrapper;

/// Kernel wrapper for phase fraction and phase component fraction updates

arrayView1d< PVTProps::CO2Solubility::KernelWrapper const > const m_co2SolubilityWrapper;

then I can compile and run the CO2-brine simulations on Lassen, and everything seems to work fine.

However, this forces me to have these arrayView1ds of size 1 in the update class, which is a little odd. What do you think would be a good way to solve this issue?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

According to https://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html#function-parameters the total size of kernel arguments must not exceed 4 KB. We haven't hit this limit before, but it seems this object may indeed be quite large (if you add up the sizes of all TableKernelWrappers and other members in its subobjects). Out of curiosity, can you print out sizeof(NewMultiPhaseMultiComponentFluidUpdate) somewhere?

An ArrayView of size 1 may be (much) smaller than its contained value, so I'm not surprised that it helps. This may be a reasonable workaround if it's not possible to reduce the size of the object. The only problem is that you also need to place the underlying Array object somewhere (inside NewMultiPhaseMultiComponentFluid?). I suppose we could also develop a host-device version of unique_ptr to provide a slightly lighter interface to manage a single object across memory spaces.

@corbett5 @rrsettgast thoughts?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Interesting. Yeah I'm not a huge fan of the arrayView1D containing a single object. A unique_ptr would be cleaner, but it wouldn't much easier to use than an array containing a single value. It would still need an owning class somewhere and a view class.

I wonder if captured variables count towards the parameter limit.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I wonder if captured variables count towards the parameter limit.

I think this is exactly what happens here. The captured-by-value object is a member of the capture object and contributes to its size. I wonder if RAJA could work around this issue on their side by dynamically allocating device memory for the device-side copy of the lambda, rather than passing it directly by-value through the CUDA launch API (conditionally, only when sizeof(LAMBDA) above limit). I don't know if copy-constructing an object into the device memory is possible without CUDA driver's "magic" though. Maybe UM is an option for this. Might be worth opening an issue with them.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

can you print out

sizeof(NewMultiPhaseMultiComponentFluidUpdate)somewhere?

-

When I do

sizeof(NewMultiPhaseMultiComponentFluidUpdate)before launching the kernel on the branch that has the size-1arrayView1ds (see here), I get: 2.336 KB and it seems to work well. -

When I try to compile on Lassen with the current version of this PR (no

arrayView1dto store theKernelWrappers), I get the following error message that, I think, contains the size of the kernel (5.920 KB):

/usr/WS1/GEOS/GEOSX/TPLs_2021-01-19/install-lassen-clang@upstream-release/raja/include/RAJA/policy/cuda/forall.hpp(137):

Error: Formal parameter space overflowed (5920 bytes required, max 4096 bytes allowed) in function

_ZN4RAJA6policy4cuda4impl18forall_cuda_kernelILm256ENS_9Iterators16numeric_iteratorIllPlEEZN5geosx34CompositionalMultiphaseFlowKernels17FluidUpdateKernel6launchINS1_9cuda_execILm256ELb0EEENS8_12constitutive38NewMultiPhaseMultiComponentFluidUpdateEEEvlRKT0_RKN7LvArray9ArrayViewIKdLi1ELi0ElNSJ_10ChaiBufferEEESP_dRKNSK_ISL_Li2ELi1ElSM_EEEUllE_lEEvT1_SG_T2_

And yes I forgot to say that for the version of 1) with the size-1 arrayView1ds I keep an array1d in NewMultiPhaseMultiComponentFluid here.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Some stupid shallow comments

src/coreComponents/constitutive/fluid/NewMultiPhaseMultiComponentFluid.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/NewSpanWagnerCO2DensityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/NewFlashModelBase.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/NewFenghourCO2ViscosityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/NewCO2SolubilityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/NewBrineCO2DensityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/NewBrineCO2DensityFunction.cpp

Outdated

Show resolved

Hide resolved

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Comments no to forget them. (we'll forget them, but at least they are somewhere).

src/coreComponents/constitutive/fluid/MultiFluidPVTPackageWrapper.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/BrineCO2DensityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/BrineCO2DensityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/FenghourCO2ViscosityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/physicsSolvers/fluidFlow/CompositionalMultiphaseFVM.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/PVTFunctionHelpers.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/BrineCO2DensityFunction.cpp

Outdated

Show resolved

Hide resolved

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Still a concern about the indices that are hard coded (e.g. around the inputPara or in #1325 (comment))

src/coreComponents/constitutive/fluid/PVTFunctions/FenghourCO2ViscosityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/FenghourCO2ViscosityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/SpanWagnerCO2DensityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/CO2SolubilityFunction.cpp

Outdated

Show resolved

Hide resolved

|

In this PR, one last thing that I have to resolve is the type selection for the To do that, an example that I was planning to follow is the selection of the Besides that, I think the PR is in good shape--provided that we are ok with the |

src/coreComponents/constitutive/fluid/PVTFunctions/FenghourCO2ViscosityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/PVTFunctionHelpers.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/PVTFunctionHelpers.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/SpanWagnerCO2DensityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/CO2SolubilityFunction.cpp

Outdated

Show resolved

Hide resolved

|

@francoishamon Have we reached a decision on whether we'll try to make this a proper multiphase model?

|

Assuming we stick to the two-phase model (for now at least), I wouldn't use the SFINAE-based pattern for this, it's too verbose. Instead we can delegate to a helper template that will be specialized for the supported combinations of functions. So in template< typename P1DENS, typename P1VISC, typename P2DENS, typename P2VISC, typename FLASH >

class MultiPhaseMultiComponentFluid

{

// ...

static string catalogName(); // leave implementation to cpp file

// ...

};

// this alias will be useful in constitutive dispatch

using CO2BrineFluid = MultiPhaseMultiComponentFluid< PVTProps::BrineCO2Density,

PVTProps::BrineViscosity,

PVTProps::SpanWagnerCO2Density,

PVTProps::FenghourCO2Viscosity,

PVTProps::CO2Solubility >;Then in namespace

{

template< typename P1DENS, typename P1VISC, typename P2DENS, typename P2VISC, typename FLASH >

TwoPhaseCatalogNames;

template<>

TwoPhaseCatalogNames< PVTProps::BrineCO2Density,

PVTProps::BrineViscosity,

PVTProps::SpanWagnerCO2Density,

PVTProps::FenghourCO2Viscosity,

PVTProps::CO2Solubility >

{

static string name() { return "CO2BrineFluid"; }

}

} // end namespace

// provide a definition for catalogName()

template< typename P1DENS, typename P1VISC, typename P2DENS, typename P2VISC, typename FLASH >

string MultiPhaseMultiComponentFluid< P1DENS, P1VISC, P2DENS, P2VISC, FLASH >::catalogName()

{

return TwoPhaseCatalogNames< P1DENS, P1VISC, P2DENS, P2VISC, FLASH >::name();

}

// explicit instantiation of the model template; unfortunately we can't use CO2BrineFluid alias for this

template class MultiPhaseMultiComponentFluid< PVTProps::BrineCO2Density,

PVTProps::BrineViscosity,

PVTProps::SpanWagnerCO2Density,

PVTProps::FenghourCO2Viscosity,

PVTProps::CO2Solubility >;

// finally, register in the factory

REGISTER_CATALOG_ENTRY( ConstitutiveBase, CO2BrineFluid, string const &, Group * const )(as always, my code is "commentware", meaning it's not guaranteed to compile or link; also, I changed the order of template arguments) |

src/coreComponents/constitutive/fluid/PVTFunctions/PVTFunctionHelpers.hpp

Outdated

Show resolved

Hide resolved

@klevzoff thanks very much for the suggestion, it is very useful. For the first question about two-phase vs true multiphase, I think it is more reasonable to stick two-phase for now since this is just a refactoring PR, and this is what the original class was doing. I think the plan is to have a true compositional CO2 model this year, but I don't know enough details about this future work to know whether it will fit in this class or not. Regarding the code that you sent, that is very impressive that you are able to write such complex code just on top of your head :) And it works (at least, on Lassen it works, we will see on Travis)! I copy pasted what you sent and I removed the brine and CO2 words from the variable names in my original code. Now the last point that makes this class not fully generic is the presence of the keywords |

src/coreComponents/constitutive/fluid/MultiPhaseMultiComponentFluid.hpp

Outdated

Show resolved

Hide resolved

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Great work!

Just some final comments after I looked at all of it (sorry!)

src/coreComponents/constitutive/fluid/PVTFunctions/SpanWagnerCO2DensityFunction.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/FenghourCO2ViscosityFunction.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/CO2SolubilityFunction.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/BrineViscosityFunction.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/BrineCO2DensityFunction.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/MultiPhaseMultiComponentFluid.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/PVTFunctionHelpers.hpp

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/PVTFunctionHelpers.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/PVTFunctionHelpers.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/PVTFunctionHelpers.hpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/PVTFunctionHelpers.hpp

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/PVTFunctions/CO2SolubilityFunction.cpp

Outdated

Show resolved

Hide resolved

src/coreComponents/constitutive/fluid/MultiPhaseMultiComponentFluid.hpp

Outdated

Show resolved

Hide resolved

|

Hello @CusiniM,

My preference goes to either 1 or 3. Let me know what you think. |

I think you should merge this PR since it's ready. We can then go for either option 1 or 2. I do not have any real preference. |

| // Note: in PVTPackage, derivatives are obtained with finite-difference approx | ||

| // The component fraction is perturbed (just as above), and then all the component fractions are normalized (as below) | ||

| // But, in the native DO model and in CO2Brine, derivatives are computed analytically. Say, in the 2-phase DO, that you want | ||

| // to compute the derivatives of phase fraction wrt component fraction. Analytically we have | ||

| // dPhaseFraction_dGlobalFraction[ic][jc] = (ic == jc) | ||

| // which is correct but will fail the test below. Instead, the finite-difference approx a la PVTPackage yields | ||

| // dPhaseFraction_dGlobalFraction[ic][jc] = (ic == jc) ? 1-composition[ic] : -composition[ic] | ||

| // because of the normalization. The second option is, in my opinion, not correct, unless I missed some | ||

| // cancellations that happen after when we differentiate wrt component densities. Hence the flag below. | ||

| if( usePVTPackage ) | ||

| { | ||

| // renormalize | ||

| real64 sum = 0.0; | ||

| for( localIndex ic = 0; ic < NC; ++ic ) | ||

| sum += compNew[ic]; | ||

| for( localIndex ic = 0; ic < NC; ++ic ) | ||

| compNew[ic] /= sum; | ||

| } |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Interesting issue. Though I don't fully understand the comment - you say the new (analytical) models have problems with this test, but you apply the re-normalization fix to the old (FD-based) models that used to work? Does this pass the test for both?

In any case, the problem seems to be applying a perturbation to a set of variables that aren't independent (constrained to unit sum). Perhaps the correct way to do this test (for both kinds of models) is to 1) apply the perturbation to one variable, 2) re-normalize, 3) compute "effective" deltas to each of the variables resulting from re-normalization, and 4) checking the numerical derivative w.r.t. all variables while taking into account the "effective" deltas (instead of dC, the original prescribed perturbation)?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes sorry for the very unclear comment, and part of it is outdated. The issue comes from the normalization that is used in this test and in PVTPackage in the FD derivatives calculations (the GitHub diff is misleading here, I only added the flag if( usePVTPackage ) to skip the normalization for the native models).

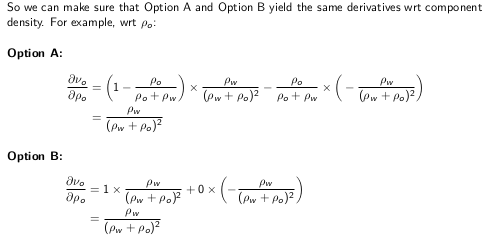

In PVTPackage the derivatives are obtained by 1) perturbing a given component fraction, and 2) re-normalizing so that the sum of the component fractions is equal to zero. With the normalization, the FD difference derivative wrt component fraction that is obtained is quite different from the analytical one. I tried to explain this for the phase fraction (equal to the component fraction in DO) in a two-phase model in the image below (not sure what GitHub will do with it):

So as long as the normalization is applied in PVTPackage, it makes sense to have it for the models implemented there. My impression (not checked yet, will let you know) is that if we use an effective "delta", we are not going to match the PVTPackage derivative (Option A), but instead, we are going to get the analytical one (Option B).

In develop, the reason why the native DO model passes the test without the flag if( usePVTPackage) is that I enforced the "FD difference derivative with normalization" in my implementation here: https://github.com/GEOSX/GEOSX/blob/d4ade8e7be45494ea619e18980f39d1b75309b7d/src/coreComponents/constitutive/fluid/DeadOilFluid.hpp#L705

But I find this way of computing the derivatives not very intuitive, so now I would like to change it in this PR here: https://github.com/GEOSX/GEOSX/blob/2a038ad3548fc77b5aeec138e27738bfe4cc09a6/src/coreComponents/constitutive/fluid/DeadOilFluid.hpp#L707

and if the normalization is used to check derivatives, testMultiFluid says that my derivative is wrong (hence the flag)

Eventually, what we care about are the derivatives wrt component density that we put in the Jacobian, and both (normalization or no normalization) approaches are equivalent, even if the intermediate derivatives wrt component fractions are quite different. There is indeed a cancellation of terms taking place in the chain rule:

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ok, I understand now, thanks for the detailed explanation! I agree that the new way of assigning dPhaseFraction_dGlobalCompFraction in DO is more intuitive (and mathematically correct, given that DO model itself does not apply input composition re-normalization, and leaves it to the user to adjust derivatives if they do so).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

For this normalization issue, in the latest commit I tried to improve my explanation in the inline comment just above the if( usePVTPackage ) flag, and in this comment I reference this PR so people can view this discussion. For now, I left the flag that skips the normalization, but you are definitely right, this will have to be homogenized.

This PR modifies the existing CO2-brine model to make it run on the GPU. The main modifications are:

TableFunctionto replace the custom tables implemented insrc/coreComponents/constitutive/fluid/PVTFunctions/UtilityFunctions.hpp.Related to https://github.com/GEOSX/integratedTests/pull/125