This repository was archived by the owner on Oct 1, 2020. It is now read-only.

I am using a Unet model for semantic segmentation. I pass a batch of images to the model, which is expected to output 0 or 1 for each pixel, depending on whether the pixel is part of a person: 0 is background and 1 is foreground.

I am trying to quantize the Unet model with the PyTorch quantization APIs for the ARM architecture, and I chose QNNPACK as the quantization configuration. However, the model accuracy is very poor for both post-training static quantization and QAT. The output is always a completely black image, i.e. it contains only background and no foreground for the person. The model output has shape b x 2 x 224 x 224, i.e. batch size x 2 channels (one for foreground and one for background) x height x width.

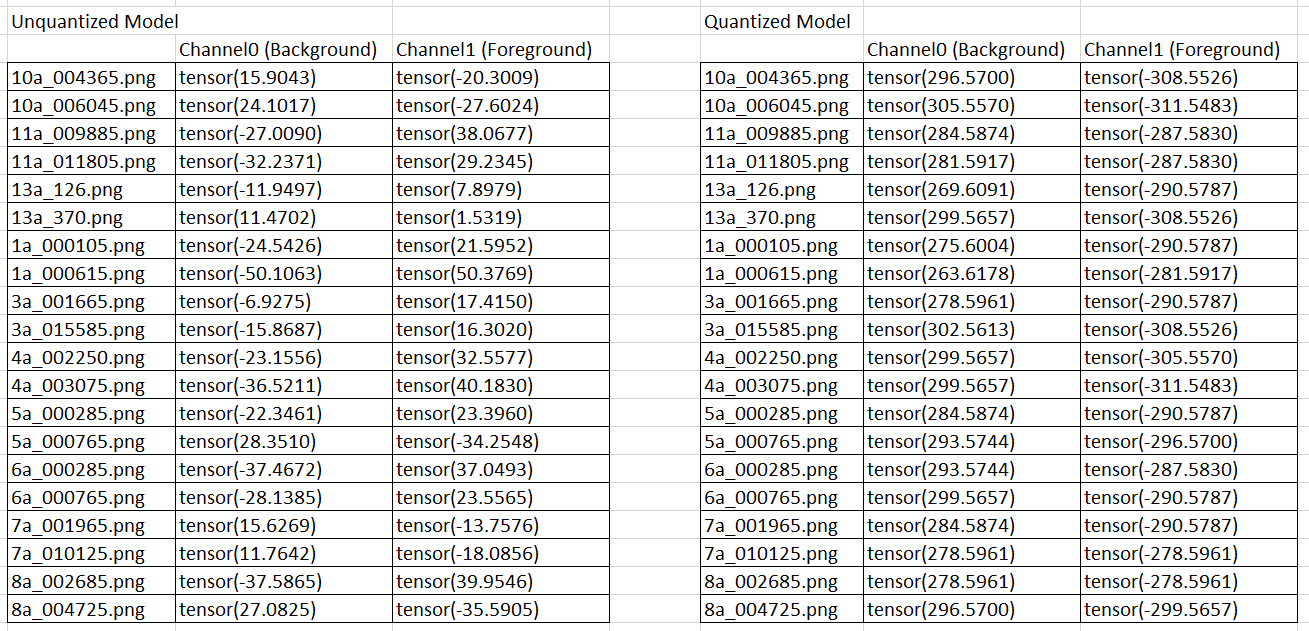

The following are the output values for the center pixels of the images, with the original model and with the quantized model.

As seen, the original model produces varying output values. Hence, when we apply softmax over dim=1 (i.e. the channel dimension) and pick the more probable channel, some pixels come out as 0 and some as 1, as expected. However, the quantized model always outputs a high positive value for the background channel and a high negative value for the foreground channel. After softmax, every pixel is classified as background, and the output is a black image.
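
To make the symptom concrete, here is a minimal pure-Python sketch of the per-pixel decision. The logit values are made up for illustration (they are not the measured outputs), and `softmax` and `predict_label` are hypothetical helpers, not part of the model code.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits):
    """Index of the most probable channel (0 = background, 1 = foreground)."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)


# Hypothetical (background, foreground) logits for four pixels.
float_logits = [(2.1, -1.3), (-0.7, 1.9), (0.4, 0.2), (-3.0, 2.5)]
# Same pixels with the pattern described above: background always strongly positive.
quantized_logits = [(9.5, -9.5), (9.5, -9.5), (9.0, -9.2), (9.5, -9.4)]

float_mask = [predict_label(p) for p in float_logits]
quantized_mask = [predict_label(p) for p in quantized_logits]

print(float_mask)      # mixed background / foreground labels
print(quantized_mask)  # every pixel labelled background
```

With logits this far apart, softmax saturates, so no threshold on the probabilities would recover the foreground either.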

I need some help finding out why this is happening. Is it a bug in the QNNPACK quantization routine?

The model source code is available here. I used the pretrained version of Unet with MobileNetV2 as the backbone (check the benchmark section in the README at the source code link) - see here.

I first tried the FBGEMM configuration, and it worked fine in terms of accuracy, with no major loss. However, when I tried QNNPACK, I ran into the issues above. The following is my code for QAT.
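
One thing that may be worth ruling out before suspecting a bug: as far as I understand, the default QNNPACK configuration quantizes weights with a single scale per tensor, whereas some other default configurations use one scale per output channel. This is an assumption about the defaults and should be checked against the installed PyTorch version. The sketch below uses made-up weights and hypothetical helper names to show how a per-tensor scale can wipe out a channel whose weights are much smaller than the rest.

```python
def scale_for(values, qmax=127):
    """Symmetric int8 scale that maps the largest magnitude onto qmax."""
    return max(abs(v) for v in values) / qmax


def quantize_dequantize(values, scale, qmin=-128, qmax=127):
    """Round to the int8 grid defined by scale, then map back to float."""
    return [max(qmin, min(qmax, round(v / scale))) * scale for v in values]


# Hypothetical weights for two output channels with very different ranges.
weights = {
    "channel_small": [0.004, -0.003, 0.002, -0.001],
    "channel_large": [2.0, -1.5, 0.8, -0.2],
}

# Per-tensor: one scale shared by every channel.
tensor_scale = scale_for([v for ch in weights.values() for v in ch])
per_tensor = {
    name: quantize_dequantize(ch, tensor_scale) for name, ch in weights.items()
}

# Per-channel: each channel gets its own scale.
per_channel = {
    name: quantize_dequantize(ch, scale_for(ch)) for name, ch in weights.items()
}

print(per_tensor["channel_small"])   # collapses to zeros
print(per_channel["channel_small"])  # stays close to the original values
```

If the model has layers with this kind of range mismatch (depthwise convolutions in MobileNetV2 are a commonly cited example), comparing per-layer weight ranges would show whether this applies here.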

    # Import aliases below are inferred from how the names are used in this snippet.
    import numpy as np
    import torch
    import torch.nn.functional as F
    import torch.nn.intrinsic.qat as nn_intrinsic_qat
    import torch.quantization as Q
    from torch.optim import lr_scheduler

    use_sigmoid = False

    def poly_lr_scheduler(optimizer, init_lr, curr_iter, max_iter, power=0.9):
        # Polynomial learning-rate decay, applied to every parameter group
        for g in optimizer.param_groups:
            g['lr'] = init_lr * (1 - curr_iter/max_iter)**power

    def dice_loss(logits, targets, smooth=1.0):
        """
        logits: (torch.float32) shape (N, C, H, W)
        targets: (torch.float32) shape (N, H, W), value {0,1,...,C-1}
        """
        if not use_sigmoid:
            outputs = F.softmax(logits, dim=1)
            targets = torch.unsqueeze(targets, dim=1)
            # targets = torch.zeros_like(logits).scatter_(dim=1, index=targets.type(torch.int64), src=torch.tensor(1.0))
            # One-hot encode the targets along the channel dimension
            targets = torch.zeros_like(logits).scatter_(dim=1, index=targets.type(torch.int64),
                                                        src=torch.ones(targets.type(torch.int64).shape))
            inter = outputs * targets
            dice = 1 - ((2 * inter.sum(dim=(2, 3)) + smooth) / (outputs.sum(dim=(2, 3)) + targets.sum(dim=(2, 3)) + smooth))
            return dice.mean()
        else:
            outputs = logits[:,1,:,:]
            outputs = torch.sigmoid(outputs)
            inter = outputs * targets
            dice = 1 - ((2*inter.sum(dim=(1,2)) + smooth) / (outputs.sum(dim=(1,2))+targets.sum(dim=(1,2)) + smooth))
            return dice.mean()

    def train_model_for_qat(model, data_loader, num_epochs, batch_size):
        device = torch.device('cpu')
        model_params = [p for p in model.parameters() if p.requires_grad]
        SGD_params = {
            "lr": 1e-2,
            "momentum": 0.9,
            "weight_decay": 1e-8
        }
        optimizer = torch.optim.SGD(model_params, lr=SGD_params["lr"], momentum=SGD_params["momentum"],
                                    nesterov=True, weight_decay=SGD_params["weight_decay"])
        init_lr = optimizer.param_groups[0]['lr']
        scheduler = lr_scheduler.StepLR(optimizer, step_size=100, gamma=1)
        max_iter = num_epochs * np.ceil(len(data_loader.dataset) / batch_size) + 5
        curr_batch_num = 0
        for epoch in range(num_epochs):
            print(f"Epoch {epoch} in progress")
            train_data_length = 0
            total_train_loss = 0
            model.train()
            for batch in data_loader:
                # get the next batch
                data, target = batch
                data, target = data.to(device), target.to(device)
                train_data_length += len(batch[0])
                optimizer.zero_grad()
                outputs = model(data)
                loss = dice_loss(outputs, target)
                total_train_loss += loss.item()
                loss.backward()
                optimizer.step()
                curr_batch_num += 1
                poly_lr_scheduler(optimizer, init_lr, curr_batch_num, max_iter, power=0.9)
            total_train_loss = total_train_loss / train_data_length
            scheduler.step()
            if epoch > 10:
                # Freeze quantizer parameters
                model.apply(Q.disable_observer)
            if epoch > 20:
                # Freeze batch norm mean and variance estimates
                model.apply(nn_intrinsic_qat.freeze_bn_stats)
            # Convert a copy to a quantized model and evaluate it
            # (eval_model_for_quantization is defined elsewhere and not shown here)
            quantized_model = Q.convert(model.eval(), inplace=False)
            accuracy = eval_model_for_quantization(quantized_model, device)
            print(f"...Accuracy at the end of epoch {epoch} : {accuracy}")
            if (accuracy > 99) and (epoch >= 10):
                print("...GUESS we are done with training now...")
                break
        return total_train_loss, model
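
For reference, the Dice formula used in the loss can be checked by hand on a tiny example. This is a simplified pure-Python sketch for a single channel of a single image (`dice_loss_single` is a hypothetical helper, not the training loss itself), using the same `smooth=1.0` default.

```python
def dice_loss_single(probs, targets, smooth=1.0):
    """Dice loss for one channel of one image, given flat lists of
    predicted probabilities and 0/1 target values."""
    inter = sum(p * t for p, t in zip(probs, targets))
    return 1 - (2 * inter + smooth) / (sum(probs) + sum(targets) + smooth)


# Four pixels, two of which are foreground.
targets = [1, 1, 0, 0]

# Perfect foreground prediction: 1 - (2*2 + 1) / (2 + 2 + 1)
perfect = dice_loss_single([1.0, 1.0, 0.0, 0.0], targets)

# All-background prediction, as in the black images: 1 - (0 + 1) / (0 + 2 + 1)
all_background = dice_loss_single([0.0, 0.0, 0.0, 0.0], targets)

print(perfect, all_background)
```

An all-background prediction therefore still carries a clearly non-zero loss on the foreground channel, so the training signal itself should push against the collapsed output.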

Am I missing anything?

One issue that we did encounter is that the upsampling layers of Unet use nn.ConvTranspose2d, which is not supported for quantization. Hence, before this layer we need to dequantize the tensors, apply nn.ConvTranspose2d in floating point, and then requantize the result for the subsequent layers. Could this be the reason for the lower accuracy?
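
As a rough way to reason about how much error one such dequantize/requantize boundary can add, here is a pure-Python sketch of affine 8-bit quantization. The activation values and the helper names are illustrative assumptions; real observers pick the range from calibration data.

```python
def choose_qparams(x_min, x_max, qmin=0, qmax=255):
    """Scale and zero point for an affine uint8 grid covering [x_min, x_max]."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # range must contain zero
    scale = (x_max - x_min) / (qmax - qmin) or 1.0
    zero_point = max(qmin, min(qmax, round(qmin - x_min / scale)))
    return scale, zero_point


def quantize(x, scale, zero_point, qmin=0, qmax=255):
    """Map a float onto the integer grid, clamping to the valid range."""
    return max(qmin, min(qmax, round(x / scale) + zero_point))


def dequantize(q, scale, zero_point):
    """Map an integer grid value back to float."""
    return (q - zero_point) * scale


# Hypothetical activations coming out of the float ConvTranspose2d.
activations = [-1.0, -0.37, 0.0, 0.42, 1.3, 2.0]
scale, zero_point = choose_qparams(min(activations), max(activations))

round_trip = [
    dequantize(quantize(x, scale, zero_point), scale, zero_point)
    for x in activations
]
max_error = max(abs(a - b) for a, b in zip(activations, round_trip))

print(scale, zero_point, max_error)
```

For values inside the observed range, the round-trip error is bounded by half a quantization step, which on its own would not normally explain an output that collapses entirely to one class. A badly estimated range at the requantization point (for example, an observer that never saw representative data) is a different matter and would be worth checking.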

    # Import paths below are inferred from how the names are used in this snippet.
    import torch
    import torch.nn as nn
    from torch.quantization import QuantStub, DeQuantStub

    #------------------------------------------------------------------------------
    # Decoder block
    #------------------------------------------------------------------------------
    class DecoderBlock(nn.Module):
        def __init__(self, in_channels, out_channels, block_unit):
            super(DecoderBlock, self).__init__()
            self.deconv = nn.ConvTranspose2d(in_channels, out_channels, kernel_size=4, padding=1, stride=2)
            self.block_unit = block_unit
            self.quant = QuantStub()
            self.dequant = DeQuantStub()

        def forward(self, input, shortcut):
            # self.deconv = nn.ConvTranspose2d not supported for FBGEMM and QNNPACK quantization
            # so run it in float: dequantize, apply the deconvolution, then requantize
            input = self.dequant(input)
            x = self.deconv(input)
            x = self.quant(x)
            x = torch.cat([x, shortcut], dim=1)
            x = self.block_unit(x)
            return x

The following is the model after QAT training is completed for 30 epochs . . .

Question - typically, how many epochs are enough for QAT fine-tuning? And do we need to supply a large number of training images (say 5000), or can a few suffice (say 100)?

Question - suppose I perform QAT for n epochs, generate the model output before calling torch.quantization.convert, then call torch.quantization.convert and generate the output again. Will the two outputs be the same? How large a gap is expected?
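
To frame the second question: my understanding is that during QAT the model stays in floating point and only simulates quantization (fake quantization), while the converted model works on integer values and requantizes the result. The sketch below is a toy pure-Python dot product under that assumption, with made-up scales, zero points, inputs, and weights; it is not how PyTorch implements either path.

```python
def quantize(x, scale, zp, qmin, qmax):
    """Map a float onto an integer grid, clamping to [qmin, qmax]."""
    return max(qmin, min(qmax, round(x / scale) + zp))


def fake_quantize(x, scale, zp, qmin, qmax):
    """Quantize and immediately dequantize, staying in float."""
    return (quantize(x, scale, zp, qmin, qmax) - zp) * scale


# Hypothetical quantization parameters.
X_SCALE, X_ZP = 0.02, 128  # uint8 activations
W_SCALE, W_ZP = 0.01, 0    # int8 weights
Y_SCALE, Y_ZP = 0.05, 128  # uint8 output

inputs = [0.30, -1.20, 0.86, 2.00]
weights = [0.50, -0.25, 1.00, 0.10]


def qat_forward(xs, ws):
    """Float arithmetic on fake-quantized inputs and weights."""
    xs = [fake_quantize(x, X_SCALE, X_ZP, 0, 255) for x in xs]
    ws = [fake_quantize(w, W_SCALE, W_ZP, -128, 127) for w in ws]
    y = sum(x * w for x, w in zip(xs, ws))
    return fake_quantize(y, Y_SCALE, Y_ZP, 0, 255)


def converted_forward(xs, ws):
    """Integer accumulation followed by requantization of the result."""
    qx = [quantize(x, X_SCALE, X_ZP, 0, 255) for x in xs]
    qw = [quantize(w, W_SCALE, W_ZP, -128, 127) for w in ws]
    acc = sum((x - X_ZP) * (w - W_ZP) for x, w in zip(qx, qw))
    qy = quantize(acc * X_SCALE * W_SCALE, Y_SCALE, Y_ZP, 0, 255)
    return (qy - Y_ZP) * Y_SCALE


y_qat = qat_forward(inputs, weights)
y_converted = converted_forward(inputs, weights)
print(y_qat, y_converted)
```

In this toy setting the two paths agree to within one output quantization step. If the real model shows a much larger gap between the prepared QAT model and the converted one, that would point at the conversion step (or the backend used at inference) rather than at the training.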

Any help is highly appreciated - thanks.
