---?image=https://source.unsplash.com/Oaqk7qqNh_c

+++

@snap[north]
@snapend

@snap[west]
@ul

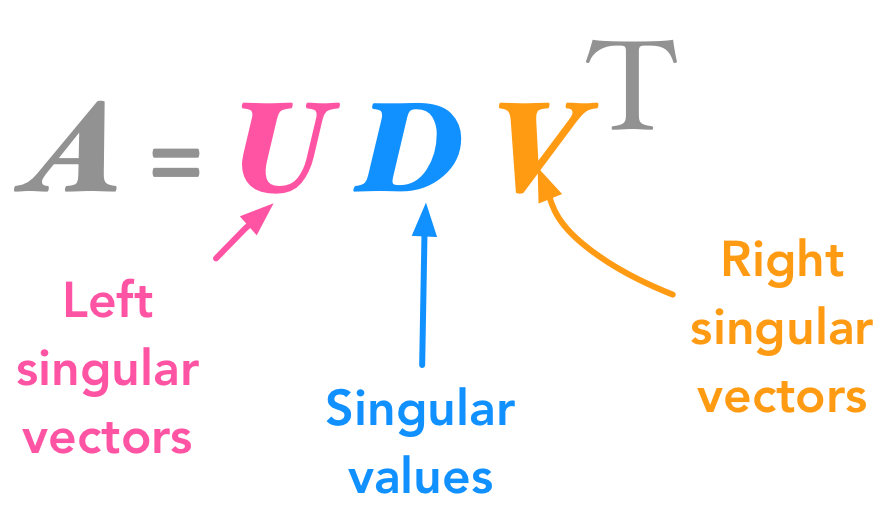

- Matrix Factorization

- SVD

- SGD

- surprise

- Our Implementation

@ulend
@snapend

---?image=https://source.unsplash.com/jgKgekpnmCI

+++?image=https://source.unsplash.com/jgKgekpnmCI

+++?image=https://source.unsplash.com/jgKgekpnmCI

+++?image=https://source.unsplash.com/jgKgekpnmCI

---?image=https://source.unsplash.com/tMvuB9se2uQ

+++?image=https://source.unsplash.com/tMvuB9se2uQ

### Stochastic Gradient Descent

@ul

* Randomly initialize `P` and `Q`
* For a given `epoch`, minimize:

@ulend

+++?image=https://source.unsplash.com/tMvuB9se2uQ

+++?image=https://source.unsplash.com/tMvuB9se2uQ

- adjust `p(u)` and `q(i)` at each `epoch` according to:
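
The update rule itself did not survive on this slide. As a minimal sketch, assuming the standard regularized squared-error objective without bias terms (the common textbook form, not necessarily the one used in this deck), one pass of SGD over the ratings could look like this. The names `sgd_factorize`, `lr`, and `reg` are hypothetical and chosen only for illustration.

```python
import random

def sgd_factorize(ratings, n_factors=2, n_epochs=200, lr=0.01, reg=0.02, seed=0):
    """Factorize (user, item, rating) triples into user factors P and item factors Q.

    Assumed objective: sum over known ratings of (r - p_u . q_i)^2
    plus reg * (|p_u|^2 + |q_i|^2). No bias terms are modelled.
    """
    rng = random.Random(seed)
    users = sorted({u for u, _, _ in ratings})
    items = sorted({i for _, i, _ in ratings})
    # Randomly initialize P and Q with small Gaussian values
    P = {u: [rng.gauss(0, 0.1) for _ in range(n_factors)] for u in users}
    Q = {i: [rng.gauss(0, 0.1) for _ in range(n_factors)] for i in items}
    history = []  # training RMSE observed during each epoch
    for _ in range(n_epochs):
        order = ratings[:]
        rng.shuffle(order)  # visit the ratings in random order
        sq_err = 0.0
        for u, i, r in order:
            pu, qi = P[u], Q[i]
            err = r - sum(a * b for a, b in zip(pu, qi))
            sq_err += err * err
            for f in range(n_factors):
                puf, qif = pu[f], qi[f]
                # Step along the negative gradient for both factor vectors
                pu[f] += lr * (err * qif - reg * puf)
                qi[f] += lr * (err * puf - reg * qif)
        history.append((sq_err / len(ratings)) ** 0.5)
    return P, Q, history

# Toy data, made up for this example only
toy = [("a", "x", 5), ("a", "y", 3), ("b", "x", 4),
       ("b", "z", 1), ("c", "y", 2), ("c", "z", 5)]
P, Q, history = sgd_factorize(toy)
print(f"RMSE first epoch: {history[0]:.3f}, last epoch: {history[-1]:.3f}")
```

A predicted rating for user `u` and item `i` is then the dot product of `P[u]` and `Q[i]`.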

---?image=https://source.unsplash.com/iVVBVb2RqLc

+++?image=https://source.unsplash.com/iVVBVb2RqLc

### SVD vs SVD++

@div[top-50 fragment]
@divend

@div[bottom-50 fragment]
@divend

+++?image=https://source.unsplash.com/iVVBVb2RqLc

### SVD vs SVD++

Recommendations for user `276847` using...

@div[left-50 fragment]

surprise-svd:

@divend


@div[right-50 fragment]

surprise-svd++:

@divend


---?image=https://source.unsplash.com/JFeOy62yjXk

+++?image=https://source.unsplash.com/JFeOy62yjXk

+++?image=https://source.unsplash.com/JFeOy62yjXk

+++?image=https://source.unsplash.com/JFeOy62yjXk

+++?image=https://source.unsplash.com/JFeOy62yjXk

+++?image=https://source.unsplash.com/JFeOy62yjXk

---?image=https://source.unsplash.com/i0K3-IHiXYI

---?image=https://source.unsplash.com/i0K3-IHiXYI

@div[left-50 fragment]

Pros

@ul

- user or book features are not required

- easy to evaluate and understand

@ulend
@divend

@div[right-50 fragment]

Cons

@ul

- cannot generate insights from the factors

- requires at least 100 data points

- Scaling

- Cold Start

- computationally intensive

@ulend
@divend

+++?image=https://source.unsplash.com/i0K3-IHiXYI

@ul

- dataset with time stamps

- confidence interval

- Grid Search

- KFold Evaluation

@ulend
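
For the K-fold evaluation item, a minimal sketch of how the rating triples could be split is shown here. It assumes plain shuffled folds with no stratification by user, and the helper name `k_fold_splits` is hypothetical. Surprise ships its own cross-validation and grid-search utilities in `surprise.model_selection`, which would likely be the practical choice.

```python
import random

def k_fold_splits(samples, n_splits=5, seed=0):
    """Yield (train, test) lists; every sample lands in exactly one test fold."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    # Deal the shuffled samples round-robin into n_splits folds
    folds = [shuffled[k::n_splits] for k in range(n_splits)]
    for k in range(n_splits):
        test = folds[k]
        train = [s for j, fold in enumerate(folds) if j != k for s in fold]
        yield train, test

# Stand-in data: integers in place of (user, item, rating) triples
for train, test in k_fold_splits(list(range(10)), n_splits=5):
    print(len(train), len(test))
```

Averaging an error metric such as RMSE over the test folds gives a less noisy estimate than a single train/test split.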